Dataset Resource:

- https://www.tugraz.at/index.php?id=22387

Dataset Overview

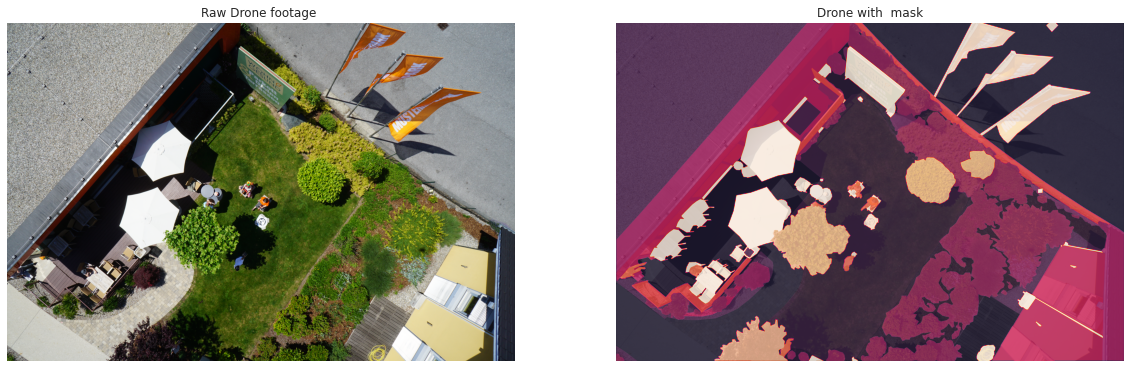

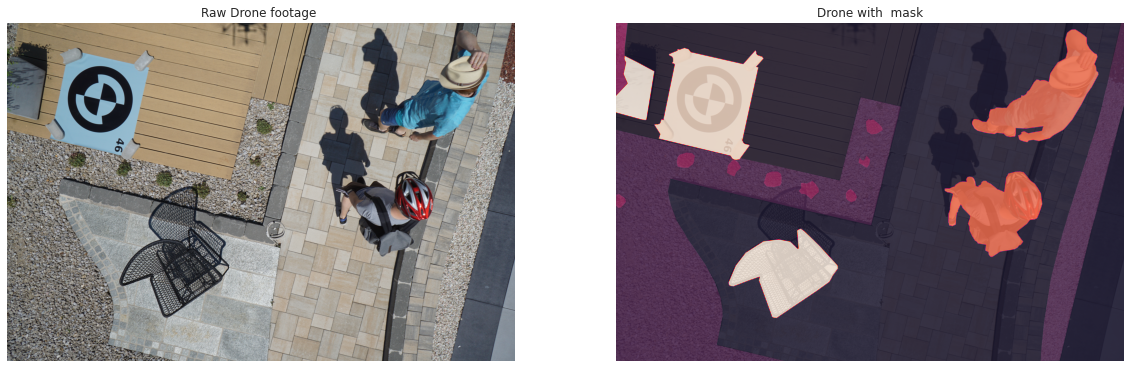

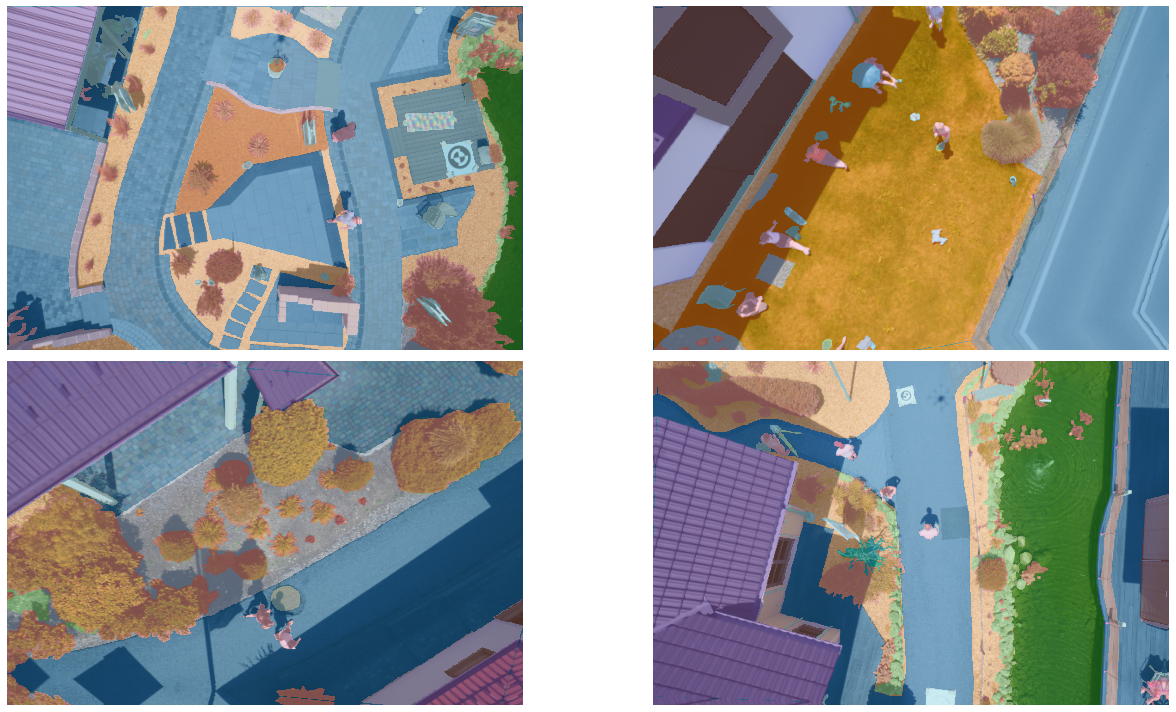

The Semantic Drone Dataset focuses on semantic understanding of urban scenes for increasing the safety of autonomous drone flight and landing procedures. The imagery depicts more than 20 houses from nadir (bird’s eye) view acquired at an altitude of 5 to 30 meters above ground. A high resolution camera was used to acquire images at a size of 6000x4000px (24Mpx). The training set contains 400 publicly available images.

| Task | Colab |
|---|---|
| Colab Notebook for Aerial Semantic Segmentation: run it yourself | |

***

Aerial Semantic Segmentation Drone Sample Images with mask

What is semantic segmentation ?

-

Source: https://divamgupta.com/image-segmentation/2019/06/06/deep-learning-semantic-segmentation-keras.html

-

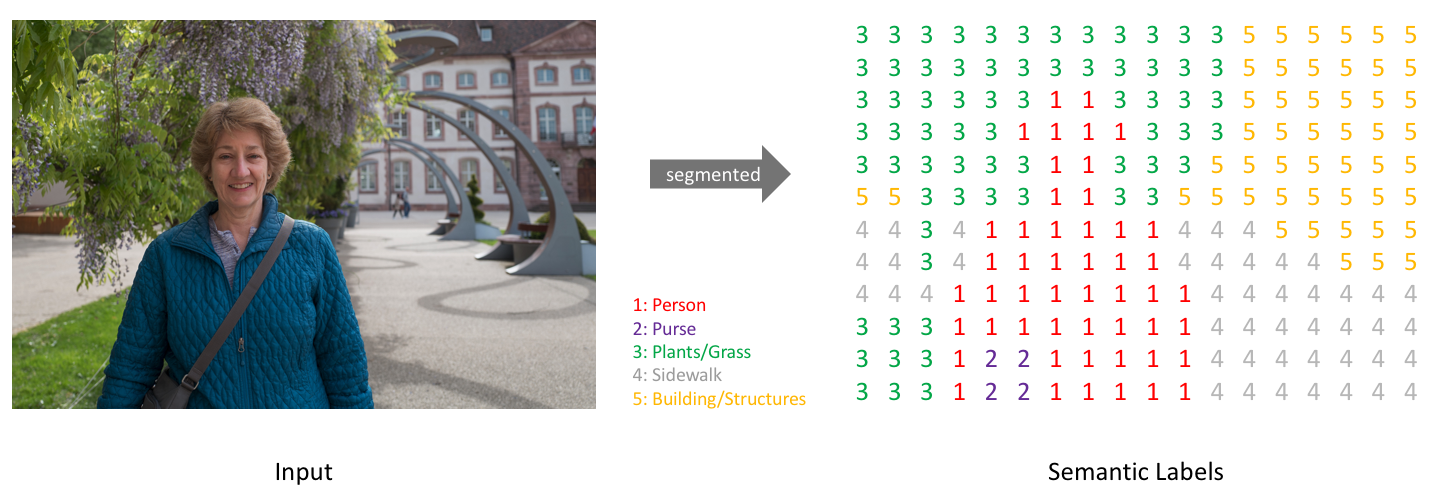

Semantic image segmentation is the task of classifying each pixel in an image from a predefined set of classes.

In the example above, different entities are classified: the pixels belonging to the bed are labeled “bed”, the pixels corresponding to the walls are labeled “wall”, and so on.

In particular, our goal is to take an image of size W x H x 3 and generate a W x H matrix containing the predicted class ID of every pixel.

Usually, in an image with various entities, we want to know which pixel belongs to which entity. For example, in an outdoor image, we can segment the sky, ground, trees, people, etc.
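To make the mapping from per-pixel scores to a matrix of class IDs concrete, here is a minimal sketch in plain Python. It assumes a model has already produced one score per class for every pixel; the nested-list layout (rows, then columns, then classes), the function name `scores_to_class_ids`, and the three class names are illustrative assumptions, not part of any library.

```python
from typing import List

def scores_to_class_ids(scores: List[List[List[float]]]) -> List[List[int]]:
    """Turn per-pixel class scores (rows x cols x num_classes) into a grid of class IDs."""
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]

# Toy 2 x 2 "image" with 3 hypothetical classes: 0 = wall, 1 = bed, 2 = floor.
scores = [
    [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
    [[0.2, 0.3, 0.5], [0.6, 0.3, 0.1]],
]
class_ids = scores_to_class_ids(scores)
print(class_ids)  # [[0, 1], [2, 0]]
```

In a real pipeline the same step is a single `argmax` over the class dimension of a tensor; the list version only spells out what that call does per pixel.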

GitHub Repo:

- Link Here

Data block to feed the model

- Created using fastai datablocks API.

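```python
from fastai.vision import *  # fastai v1: SegmentationItemList, get_transforms, imagenet_stats

# path, get_y_fn, codes and src are defined elsewhere in the notebook.
data = (SegmentationItemList.from_folder(path=path/'original_images')
        .split_by_rand_pct(0.1)                              # hold out 10% for validation
        .label_from_func(get_y_fn, classes=codes)            # image path -> mask path
        .transform(get_transforms(), size=src, tfm_y=True)   # apply the same transforms to masks
        .databunch(bs=4)
        .normalize(imagenet_stats))

data.show_batch(rows=3)
```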
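The data block relies on two names that are not shown in this README, `get_y_fn` and `codes`. A minimal sketch of what they might look like follows. The dataset root, the `label_images_semantic` folder name, the `.png` mask extension, and the three class names are all assumptions for illustration; check them against your local copy of the dataset.

```python
from pathlib import Path

# Hypothetical dataset root; adjust to wherever the images were extracted.
path = Path('semantic_drone_dataset')

# Hypothetical subset of class names; index i is the class ID stored in the masks.
codes = ['unlabeled', 'paved-area', 'grass']

def get_y_fn(x):
    """Map an image path to its mask path, assuming masks share the image's file stem."""
    return path / 'label_images_semantic' / f'{Path(x).stem}.png'

mask_path = get_y_fn(path / 'original_images' / '000.jpg')
print(mask_path.as_posix())  # semantic_drone_dataset/label_images_semantic/000.png
```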

Model | unet_learner

fastai’s unet_learner

- Source: Fast.ai

- This module builds a dynamic U-Net from any backbone pretrained on ImageNet, automatically inferring the intermediate sizes.

- The architecture follows the original U-Net. The difference here is that the left part (the encoder) is a pretrained model.

- This U-Net sits on top of an encoder (which can be a pretrained model, e.g. resnet50) and produces a final output with num_classes channels.

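```python
import pretrainedmodels

void_code = -1  # label value for pixels that should be ignored when scoring

def accuracy_mask(input, target):
    """Pixel accuracy computed only over non-void pixels."""
    target = target.squeeze(1)
    mask = target != void_code
    return (input.argmax(dim=1)[mask] == target[mask]).float().mean()

arch = pretrainedmodels.__dict__["resnet50"](num_classes=1000, pretrained="imagenet")

learn = unet_learner(data,                          # DataBunch
                     arch,                          # backbone pretrained arch
                     metrics=[accuracy_mask],       # metrics
                     wd=wd,                         # weight decay (wd is set elsewhere in the notebook)
                     bottle=True,                   # bottleneck in the final cross-connection block
                     model_dir='/kaggle/working/')  # model directory to save
```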
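The effect of `void_code` in `accuracy_mask` is easiest to see on a tiny example. The sketch below re-implements the same idea on flat Python lists instead of tensors (an assumption made to keep it dependency-free; `masked_accuracy` is an illustrative name): pixels whose target equals the void code are dropped before correct predictions are counted.

```python
VOID_CODE = -1  # same convention as void_code in the metric

def masked_accuracy(pred_ids, target_ids, void_code=VOID_CODE):
    """Fraction of correctly predicted pixels, ignoring pixels labeled void_code."""
    kept = [(p, t) for p, t in zip(pred_ids, target_ids) if t != void_code]
    if not kept:
        raise ValueError("no non-void pixels to score")
    return sum(p == t for p, t in kept) / len(kept)

pred_ids   = [0, 1, 2, 1, 0]
target_ids = [0, 1, -1, 2, 0]   # the third pixel is void and is excluded
print(masked_accuracy(pred_ids, target_ids))  # 0.75
```

Of the four scored pixels, three are correct. Without the mask, the void pixel would count as an error and the score would drop to 3/5.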

Training :

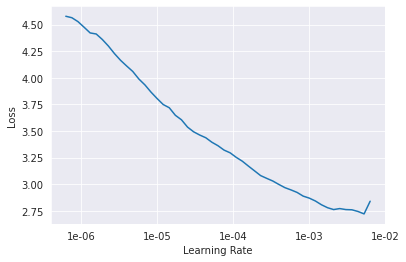

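- Learning rate: used fastai’s lr_find() function to find a suitable learning rate.

```python
from fastai.callbacks import SaveModelCallback

learn.lr_find()
learn.recorder.plot()

# Save the weights whenever the monitored metric improves.
callbacks = SaveModelCallback(learn, monitor='accuracy_mask', every='improvement', name='best_model')

# lr is the learning rate picked from the plot above.
learn.fit_one_cycle(12, slice(lr), pct_start=0.8, callbacks=[callbacks])
```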
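Setting `pct_start = 0.8` means the learning rate keeps rising for the first 80% of training and only anneals in the last 20%. The following is a rough sketch of such a schedule, not fastai's own code: it assumes cosine annealing in both phases, and the `div_factor` and `final_div` values are assumed to mirror fastai v1 defaults. The step count is hypothetical.

```python
import math

def annealing_cos(start, end, pct):
    """Cosine interpolation from start (pct=0) to end (pct=1)."""
    return end + (start - end) / 2 * (math.cos(math.pi * pct) + 1)

def one_cycle_lr(step, total_steps, lr_max, pct_start=0.8,
                 div_factor=25.0, final_div=25.0e4):
    """Learning rate at a step: warm up for pct_start of training, then anneal down."""
    warmup_steps = round(total_steps * pct_start)
    if step < warmup_steps:
        return annealing_cos(lr_max / div_factor, lr_max, step / warmup_steps)
    pct = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return annealing_cos(lr_max, lr_max / final_div, pct)

total = 120  # e.g. 12 epochs x 10 batches (hypothetical batch count)
for step in (0, 48, 96, 119):
    print(step, f"{one_cycle_lr(step, total, lr_max=1e-3):.2e}")
```

With these numbers the peak learning rate is reached at step 96, which may explain why the largest accuracy gains in a run like this show up in the final few epochs.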

| epoch | train_loss | valid_loss | drone_accuracy_mask | time |
|---|---|---|---|---|
| 0 | 2.106114 | 1.835669 | 0.483686 | 00:16 |
| 1 | 1.697477 | 1.331731 | 0.618320 | 00:12 |
| 2 | 1.462504 | 1.227808 | 0.659259 | 00:13 |
| 3 | 1.455247 | 1.147135 | 0.691440 | 00:12 |
| 4 | 1.336305 | 1.115214 | 0.691359 | 00:12 |
| 5 | 1.247270 | 1.168395 | 0.669359 | 00:12 |
| 6 | 1.310875 | 1.181834 | 0.672961 | 00:13 |
| 7 | 1.196860 | 1.115905 | 0.708513 | 00:12 |
| 8 | 1.131353 | 0.888599 | 0.778729 | 00:12 |
| 9 | 0.983422 | 0.799664 | 0.851884 | 00:12 |
| 10 | 0.973422 | 0.779664 | 0.881884 | 00:12 |
| 11 | 0.953422 | 0.749664 | 0.911884 | 00:12 |
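With `every = 'improvement'`, the save callback only writes a checkpoint when the monitored metric beats the best value seen so far. The sketch below replays that rule over the accuracy column of the table to show which epochs would have overwritten `best_model`. The helper name `epochs_that_improve` is illustrative, and this is a simplified stand-in rather than fastai's implementation.

```python
def epochs_that_improve(values):
    """Return the epoch indices at which the metric exceeds every earlier value."""
    best = float('-inf')
    improved = []
    for epoch, value in enumerate(values):
        if value > best:
            best = value
            improved.append(epoch)
    return improved

# Accuracy column copied from the training log.
drone_accuracy_mask = [
    0.483686, 0.618320, 0.659259, 0.691440, 0.691359, 0.669359,
    0.672961, 0.708513, 0.778729, 0.851884, 0.881884, 0.911884,
]
print(epochs_that_improve(drone_accuracy_mask))  # [0, 1, 2, 3, 7, 8, 9, 10, 11]
```

Epochs 4 to 6 do not beat the value from epoch 3, so under this rule the checkpoint from epoch 3 would remain in place until epoch 7.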

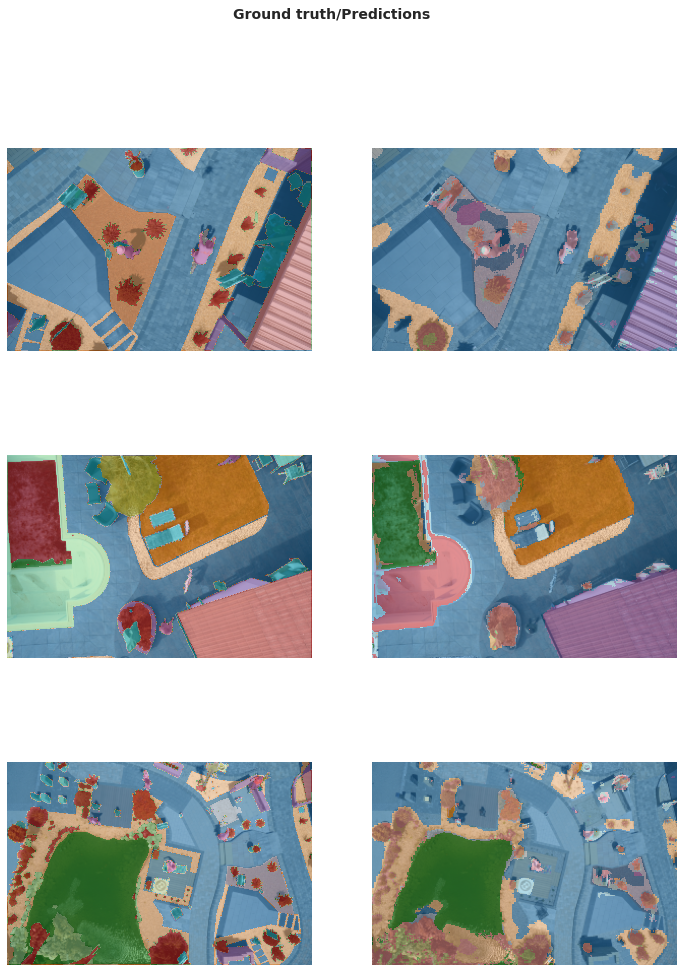

Results

An initial dynamic U-Net on top of an encoder (resnet34, pretrained on ImageNet), trained for 10 epochs, gave an accuracy of 91.1%.

```python
learn.show_results(rows=3, figsize=(12,16))
```